Understanding Kafka Replication Factor and Partitions

Kafka is a distributed event store and event streaming platform, so it is designed to be fault-tolerant when running as a cluster across many nodes. The Replication Factor is one of the main settings that makes this possible.

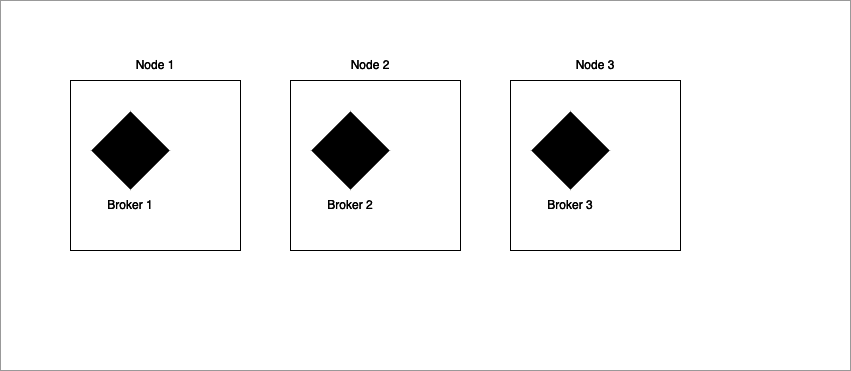

Let’s say that we have a Kafka cluster running on three nodes, as in the image below. Depending on your application, it is common to run on more nodes, but let’s keep it simple with just three. We already know that any system or application can become unavailable or inactive due to a misconfiguration or an unexpected event.

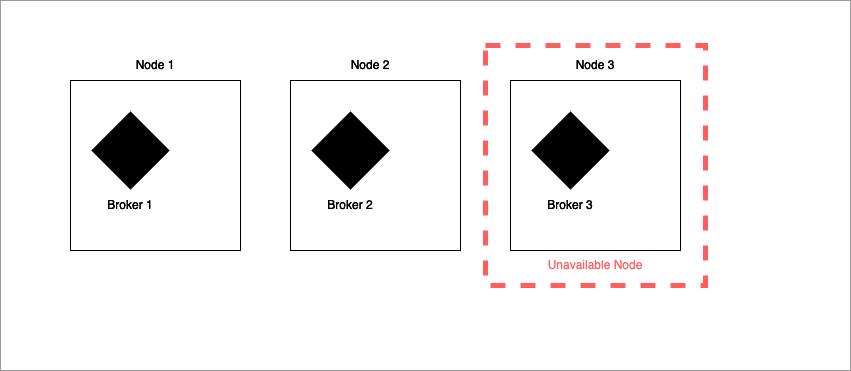

Kafka is no different. You likely have many producers and consumers exchanging events through many brokers, and these brokers (running on nodes) can become unavailable, as in the image below. Kafka has settings to handle this scenario so that consumers and producers keep working. This is where Partitions and the Replication Factor come in.

Partitions

In Kafka, events are essentially stored in log files, separated and organized into partitions. You can think of partitions as office drawers with dividers to separate papers: papers related to company A go in one divider, papers related to company B in another, and so on. This is only a brief summary of partitions in Kafka; you can find more detail in my other blog post dedicated to partitions.

So, with all these events separated into partitions, Kafka has to guarantee that this information is not lost if a node stops working, because a consumer may depend on it to execute some business logic.

This is where the Replication Factor comes into play.
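To make the drawer analogy concrete, here is a minimal sketch of how a record key could be mapped to a partition. It assumes the common "hash the key, then take it modulo the number of partitions" idea. The function `pick_partition`, the company keys, and the use of CRC32 are all illustrative assumptions; Kafka's real default partitioner uses a different hash function.

```python
import zlib


def pick_partition(key: str, num_partitions: int) -> int:
    """Map a record key to a partition index (illustrative only)."""
    # CRC32 stands in for Kafka's real hash; only the "hash mod N" idea matters.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# Hypothetical topic with 3 partitions and events keyed by company name.
events = [
    ("company-a", "invoice 1"),
    ("company-b", "invoice 2"),
    ("company-a", "invoice 3"),
]

partitions = {p: [] for p in range(3)}
for key, value in events:
    partitions[pick_partition(key, 3)].append((key, value))

for index, records in partitions.items():
    print(index, records)
```

Events with the same key always land in the same partition, just like papers for the same company always go behind the same divider.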

Replication Factor

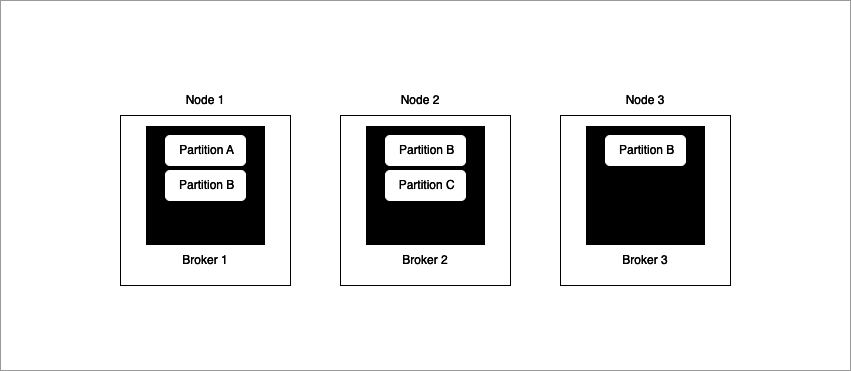

The Replication Factor is the mechanism that replicates each partition's messages to other Kafka brokers. The number defined in this setting is the total number of copies of each partition, each copy stored on a different broker. The image below shows an example.

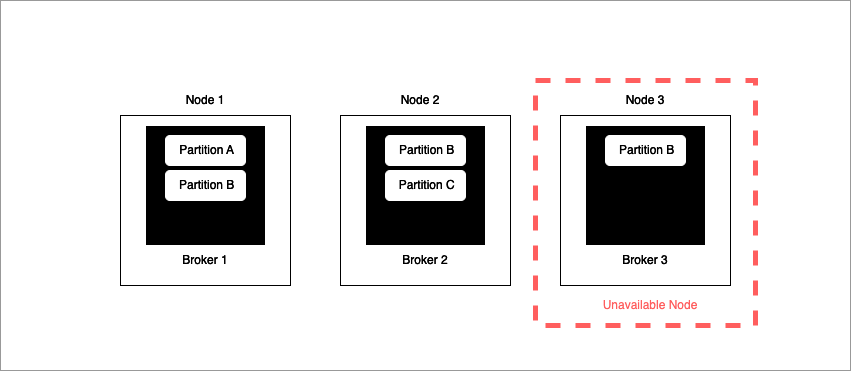

As you can see, the partitions are replicated to other Kafka brokers, in case one of these brokers becomes unavailable.

Under the hood, each partition has a leader that receives the new messages for that partition. The copies of the partition on other brokers, called followers, then replicate those messages from the leader.

In a scenario where a consumer needs to receive an event from a partition and the broker hosting it becomes unavailable, the consumer will still be able to receive the message, because a replica exists on another active broker and can take over as leader.
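The layout in the image can be sketched in a few lines of Python. This is a simplified round-robin placement, not Kafka's actual assignment algorithm. The function `assign_replicas` and the broker ids 1, 2 and 3 are assumptions made for illustration.

```python
def assign_replicas(brokers, num_partitions, replication_factor):
    """Return {partition: [broker ids holding a copy]} using simple round-robin."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    assignment = {}
    for partition in range(num_partitions):
        # Start at a different broker for each partition, then take the next ones.
        assignment[partition] = [
            brokers[(partition + offset) % len(brokers)]
            for offset in range(replication_factor)
        ]
    return assignment


# Three brokers, three partitions, replication factor 3 (hypothetical values).
layout = assign_replicas([1, 2, 3], num_partitions=3, replication_factor=3)
for partition, replicas in layout.items():
    print(f"partition {partition}: copies on brokers {replicas}")
```

With a replication factor of 3 on three brokers, every broker ends up holding a copy of every partition, so losing one broker leaves two full copies available.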
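The failover described above can be simulated with a small sketch. It assumes every follower is fully caught up and ignores Kafka's in-sync replica tracking, so it only shows the basic idea: the first replica on a live broker becomes the leader. The function `elect_leaders` and the sample assignment are hypothetical.

```python
def elect_leaders(assignment, live_brokers):
    """Pick, for each partition, the first replica hosted on a live broker."""
    leaders = {}
    for partition, replicas in assignment.items():
        # None means no copy of the partition is currently reachable.
        leaders[partition] = next(
            (broker for broker in replicas if broker in live_brokers), None
        )
    return leaders


# Hypothetical layout: 3 partitions, each copied to brokers 1, 2 and 3.
assignment = {0: [1, 2, 3], 1: [2, 3, 1], 2: [3, 1, 2]}

before = elect_leaders(assignment, live_brokers={1, 2, 3})
after = elect_leaders(assignment, live_brokers={2, 3})  # broker 1 went down

print("before:", before)
print("after: ", after)
```

In this sketch, partition 0 loses its leader when broker 1 goes down, and the follower on broker 2 takes over, so producers and consumers can keep working.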

How to choose the number for the Replication Factor

The number 3 is commonly used because it balances tolerance to broker loss against replica overhead. If you want to define another number, documentation and articles online generally suggest a value equal to the number of brokers or one less than the number of brokers. In any case, the Replication Factor cannot exceed the number of brokers (RF ≤ number of brokers).

I hope this article clarifies the Kafka Replication Factor. If you want more technical details, you can consult the Confluent documentation.
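The trade-off can be put into numbers with a small sketch. It assumes all replicas are fully in sync, in which case a partition survives the loss of all but one of its copies. The function `replication_tradeoff` and its field names are made up for this example.

```python
def replication_tradeoff(replication_factor: int, num_brokers: int) -> dict:
    """Summarize the cost and benefit of a replication factor (simplified)."""
    if not 1 <= replication_factor <= num_brokers:
        raise ValueError(
            "replication factor must be between 1 and the number of brokers"
        )
    return {
        # Every message is stored this many times across the cluster.
        "copies_per_partition": replication_factor,
        # One surviving copy is enough to keep the data, if replicas are in sync.
        "broker_failures_survived": replication_factor - 1,
    }


for rf in (1, 2, 3):
    print(rf, replication_tradeoff(rf, num_brokers=3))
```

A replication factor of 1 means no protection at all, while each extra copy costs more storage and replication traffic, which is why 3 is such a common middle ground.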